Overview

You are a robot, but like the Scarecrow in The Wizard of Oz, you have no brain. John the human wants to change that, so he fills your empty head with a model of a fire engine.

But John also wants you to identify the fire engine by knowing the components that comprise it, so he provides you with this knowledge.

In addition, he tells you about variations on the fire engine: if the parts do not entirely match those of a fire engine, they may more closely match those of an ambulance or some other type of vehicle.

AI is Learning

The basic premise behind AI is to create algorithms (computer programs) that can scan unknown data and compare it to data they are already familiar with. Let’s start by looking at another example.

The AI Mindset

Is this a fork or a spoon? Is it a knife? Well, they all have handles, but this one has spikes. Let me look up what pieces of information I have in my database that look like this item. Oh, I have a piece that resembles this spike pattern, so it must be a fork!

AI algorithms scan the unknown data’s characteristics, called patterns. They then match those patterns to data they have already recognized, a process called pattern recognition. The data they recognize is called labeled data or training data, and the complete set of this labeled data is called the dataset. The result is a decision about what the unknown item is.

The individual pieces of information within the dataset are called data points, also called input points. This whole process of scanning, comparing, and determining is called machine learning. (There are seven steps involved in machine learning, and we will touch upon those steps in our Artificial Intelligence 102 article.)
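
To make the matching idea concrete, here is a minimal sketch in Python. Everything in it is made up for illustration: the three features (number of tines, bowl shape, sharp edge), the tiny labeled dataset, and the function names. It simply picks the label of the closest known data point, which is only one of many ways a program could do the comparison.

```python
# Hypothetical labeled dataset. Each data point is
# (number_of_tines, is_bowl_shaped, has_sharp_edge) paired with a label.
LABELED_DATA = [
    ((4, 0, 0), "fork"),
    ((3, 0, 0), "fork"),
    ((0, 1, 0), "spoon"),
    ((0, 0, 1), "knife"),
]


def distance(a, b):
    """Squared difference between two sets of features (smaller = more alike)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def identify(unknown):
    """Return the label of the known data point that best matches the unknown item."""
    _, label = min(LABELED_DATA, key=lambda item: distance(item[0], unknown))
    return label


# An unknown utensil with two tines, no bowl, and no sharp edge.
print(identify((2, 0, 0)))
```

The unknown item is not identical to anything in the dataset, yet it still lands closest to a fork because of its tines.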

For example, if you are going to write a computer program that lets you draw a kitchen on the screen, you would need a dataset containing data points for the different items in the kitchen, such as a stove, fridge, and sink, as well as utensils, to name a few. Hence our analysis of the fork in the image above.

Note: As a general rule, the more information (data points) that is input into the dataset, the more precise the algorithm’s determination will be.

Now, let’s go a bit deeper into how a computer program is written.

Writing the Computer Program

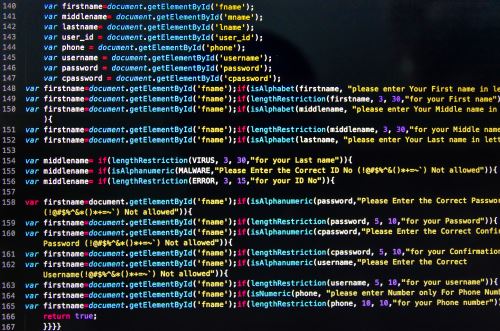

We spoke about how computers are programmed using instructions in our bits and bytes article, but as a refresher, let’s recap!

Computer programs, also called algorithms, tell the computer what to do through instructions that a human programmer has entered. One of our examples was a program that distributes funds to an ATM recipient. It was programmed to distribute the funds if there was enough money in the person’s account and not if there wasn’t.

But THIS IS NOT AI since the instructions are specific and there are no variations to decide anything other than “if this, then that”.

In other words, the same situation will occur over and over with only two results. The program makes no determination about other issues, such as the potential for fraudulent activity.

Bottom line – There is no learning involved.
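
Written out in Python, the ATM rule might look like the sketch below. The function name and the return values are our own invention for illustration; the point is that the program can only ever follow the one rule it was given.

```python
def dispense(balance, amount):
    """Fixed rule: pay out only if the account holds enough money."""
    if balance >= amount:
        return "dispense"
    return "decline"


print(dispense(100, 40))  # dispense
print(dispense(30, 40))   # decline
```

No matter how many times this program runs, it never gets any better at its job, and it never notices anything the programmer did not spell out.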

Writing a Learning Program

The ATM example is limited to two options, but AI is much more intensive than that. It is used to scan thousands of items of data to determine a conclusion.

How Netflix Does It

Did you ever wonder how Netflix shows you movies or TV shows that are tuned to your interests? It does this by examining what your preferences are based on your previous viewings.

The algorithm analyzes large amounts of data, including user preferences, viewing history, ratings, and other relevant information to make personalized recommendations for each user.

It employs machine learning to predict which movies or TV shows the user is likely to enjoy.

It identifies patterns and similarities between users with similar tastes and suggests content that has been positively received by those users but hasn’t been watched by the current user.

For example, if a user has watched science fiction movies, the algorithm might suggest other sci-fi films or TV shows that are popular among users with similar preferences.
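
The idea of "users with similar tastes" can be sketched in a few lines of Python. This is not Netflix's actual algorithm, which is far more elaborate; the user names, titles, and function names are hypothetical, and the similarity measure (how much two viewing histories overlap) is just one simple choice.

```python
# Hypothetical viewing histories: user -> set of titles they watched and liked.
HISTORY = {
    "ana": {"Space Film A", "Space Film B", "Space Film C"},
    "ben": {"Space Film A", "Space Film B", "Space Film D"},
    "cara": {"Romance X", "Romance Y"},
}


def similarity(a, b):
    """Share of titles two users have in common (Jaccard similarity)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(user):
    """Suggest unseen titles, weighted by how similar their fans are to this user."""
    mine = HISTORY[user]
    scores = {}
    for other, theirs in HISTORY.items():
        if other == user:
            continue
        weight = similarity(mine, theirs)
        for title in theirs - mine:
            scores[title] = scores.get(title, 0.0) + weight
    # Keep only titles backed by at least one similar user, best score first.
    liked = [t for t in scores if scores[t] > 0]
    return sorted(liked, key=lambda t: (-scores[t], t))


print(recommend("ana"))
```

Ana and Ben share two sci-fi titles, so Ben's remaining title is suggested to Ana, while Cara's romance titles are not because her history has nothing in common with Ana's.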

The program will learn and adapt as the user continues to interact with the platform, incorporating feedback from their ratings and viewings to refine future recommendations.

By leveraging machine learning, streaming platforms like Netflix can significantly enhance the user experience by providing tailored recommendations, increasing user engagement, and improving customer satisfaction.

This can’t be done using the non-learning ‘if-else’ program we previously spoke about in the ATM example.

A Gmail AI Example

As you type an email, Gmail reads it and suggests the words you are likely to type next, before you have even typed them.

This is called language modeling, a technique from Natural Language Processing (NLP).

In NLP, the algorithm uses probability to predict the most likely next word in a sentence based on the words already entered.
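
Here is a toy version of next-word prediction in Python. It is a sketch only, and not how Gmail works internally: it counts which word most often follows another in a handful of made-up sentences, then turns those counts into a probability. The sentences and function names are assumptions for illustration.

```python
from collections import Counter, defaultdict


def train(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return (most likely next word, its probability), or None if the word is unknown."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    best, times_seen = followers.most_common(1)[0]
    return best, times_seen / sum(followers.values())


# Hypothetical training sentences.
corpus = [
    "thank you for your email",
    "thank you for your help",
    "thank you very much",
]
model = train(corpus)
print(predict_next(model, "thank"))
print(predict_next(model, "you"))
```

In this tiny dataset "you" always follows "thank", and "for" follows "you" in two sentences out of three, so those are the suggestions the program makes.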

AI algorithms feed on data to learn new things.

The more data (data points) that exist, the easier it will be for the model to identify the patterns of an unknown entity.

AI: How it All Works

There are three main types of machine learning: supervised learning, unsupervised learning, and reinforcement learning.

Supervised Learning

This is the most common type of machine learning. It involves feeding a computer a large amount of data to enable it to recognize patterns from the labeled dataset and make predictions when confronted with new data.

In other words, supervised learning consists of training a computer program to read from a data sample (dataset) to identify what the unknown data is.

How the Machine Thinks with Supervised Learning

Show and Tell: A human labels a dataset with data points that identify the sample set to be a building.

Then the human does the same thing to identify a bridge. This is another classification different from the building classification and is identified with specific patterns that make up a bridge.

The program takes note of the patterns of each classification. If computer instructions were written in plain English, this is what it would say:

This is a bridge. Look at the patterns that make up the bridge. And this is a building. Look at the patterns that make up the building. I can see distinguishable differences in the characteristics between the two. Let me match them up to the unknown data and make a decision on whether this new data is a bridge or a building.

Supervised learning is used in many applications such as image recognition, speech recognition, and natural language processing.

Supervised learning compares unknown data against a data sample. What the program learns from that sample is called a data model.

It’s Raining Cats and Dogs

A supervised learning algorithm could be trained using a set of images labeled “cats” and “dogs”, with each image tagged with data points that distinguish one animal from the other.

The program would be designed to learn the difference between the animals by using pattern recognition as it scans each image.

A computer instruction (in simplified terms) might be “If you see a pattern of thin lines from the face (whiskers), then this is a cat”.

The result would be that the program can tell whether a new image it is presented with is that of a cat or a dog!

This type of learning involves two categories – cats and dogs. When only two classifications are involved, it is called Binary Classification.
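
A binary classifier can be sketched in Python as follows. The two measurements (whisker length and snout length), the numbers, and the function names are all invented for illustration, and real image recognition works on pixels rather than two tidy numbers. The sketch learns an "average cat" and an "average dog" from the labeled examples, then assigns a new animal to whichever average it sits closer to.

```python
# Hypothetical labeled training data:
# (whisker_length_cm, snout_length_cm) paired with a label.
TRAINING = [
    ((7.0, 2.0), "cat"),
    ((8.0, 3.0), "cat"),
    ((6.0, 2.5), "cat"),
    ((3.0, 9.0), "dog"),
    ((2.0, 8.0), "dog"),
    ((4.0, 10.0), "dog"),
]


def train(examples):
    """Learn one average point (centroid) per label from the labeled examples."""
    sums = {}
    for features, label in examples:
        total, count = sums.get(label, ([0.0] * len(features), 0))
        total = [t + f for t, f in zip(total, features)]
        sums[label] = (total, count + 1)
    return {label: [t / count for t in total] for label, (total, count) in sums.items()}


def classify(model, features):
    """Return the label whose average point is closest to the new data."""
    def squared_distance(centroid):
        return sum((c - f) ** 2 for c, f in zip(centroid, features))

    return min(model, key=lambda label: squared_distance(model[label]))


model = train(TRAINING)
print(classify(model, (7.5, 2.5)))  # long whiskers, short snout
print(classify(model, (2.5, 9.5)))  # short whiskers, long snout
```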

Supervised Learning Using Multi-Class Classification

An Example

Suppose you are studying insects and you want to separate flying insects from crawling ones. Well, that’s easy. You take a bug that you found in your backyard and compare it to the ant and fly you already stored on your insect board. In AI terms, this is supervised binary classification.

You immediately know, based on the pattern configuration of the insect, which classification it belongs to: the crawlers or the flies. Now you grab more flies and put them in the fly category and do the same with the creepy crawlers for their category.

Let’s say you want to go deeper into the fly classification and find out what type of fly it is (e.g. house fly, horse fly, fruit fly, horn fly, etc.), but you only have two classifications to compare them to: flies and crawlers. So what do we do? You create more classifications for the fly class.

This is multi-classification, more technically called multi-class classification, which provides additional labeled classes for the algorithm to compare the new data to.
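
The fly example can be sketched in Python with three classes instead of two. The measurements below are invented for illustration and are not real entomology data, and the function name is our own. The sketch looks at the three labeled flies most similar to the new one and lets them vote, a technique known as k-nearest neighbors.

```python
import math
from collections import Counter

# Hypothetical labeled data: (body_length_mm, wing_span_mm) paired with a label.
# The numbers are made up purely to illustrate three separate classes.
TRAINING = [
    ((3.0, 6.0), "fruit fly"),
    ((2.5, 5.0), "fruit fly"),
    ((3.5, 6.5), "fruit fly"),
    ((7.0, 14.0), "house fly"),
    ((6.5, 13.0), "house fly"),
    ((7.5, 15.0), "house fly"),
    ((20.0, 40.0), "horse fly"),
    ((22.0, 44.0), "horse fly"),
    ((18.0, 38.0), "horse fly"),
]


def classify(sample, k=3):
    """Let the k most similar labeled flies vote on the class of the new one."""
    nearest = sorted(TRAINING, key=lambda item: math.dist(item[0], sample))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


print(classify((3.0, 6.0)))
print(classify((7.0, 14.0)))
print(classify((21.0, 42.0)))
```

Adding a fourth class, such as the horn fly, would only require adding labeled examples for it; the code itself stays the same.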

We will delve more into multi-class classifications and how they work in our next article, but for now, just know what binary classifications and multi-class classifications are.

Unsupervised Learning

Unsupervised learning involves training a computer program without providing any labels or markings to the data. The aim is to enable the program to find (learn) patterns and relationships on its own.

It does this by reading millions of pieces of information and grouping them into categories based on their characteristics or patterns, then making decisions on what the new entity is by matching it up to one of those categories.

In other words, it matches patterns of the unknown data to the groups it created and then labels them without human intervention. This is called clustering.
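
Here is a small Python sketch of clustering. It uses a simplified, one-dimensional version of a well-known method called k-means with exactly two groups; the numbers and function names are assumptions for illustration. Notice that the data carries no labels at all: the program discovers the two groups by itself.

```python
def k_means_1d(values, iterations=10):
    """Split unlabeled numbers into two groups around two moving centers."""
    centroids = [min(values), max(values)]  # simple starting guess
    clusters = [[], []]
    for _ in range(iterations):
        clusters = [[], []]
        for v in values:
            nearest = 0 if abs(v - centroids[0]) <= abs(v - centroids[1]) else 1
            clusters[nearest].append(v)
        # Move each center to the average of its group (keep it if the group is empty).
        new_centroids = [
            sum(c) / len(c) if c else centroids[i] for i, c in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # the groups have stopped changing
        centroids = new_centroids
    return centroids, clusters


def assign(value, centroids):
    """Match a new, unknown value to the group whose center is closest."""
    return 0 if abs(value - centroids[0]) <= abs(value - centroids[1]) else 1


# Hypothetical unlabeled measurements.
centroids, clusters = k_means_1d([1, 2, 3, 10, 11, 12])
print(clusters)
print(assign(9, centroids))
```

The program ends up with one group of small numbers and one group of large numbers, and a new value of 9 is matched to the group of large numbers.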

A related unsupervised task is anomaly detection: identifying data points that are unusual or different from the rest of the data. This can be useful for tasks such as fraud detection and quality control.
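
A simple form of anomaly detection can be sketched in Python like this. The transaction amounts, the threshold of 5, and the function name are hypothetical. The sketch measures how far each value sits from the typical (median) value, relative to how spread out the data normally is, and flags anything far outside that range.

```python
import statistics


def find_anomalies(values, threshold=5.0):
    """Flag values that sit unusually far from the median of the data."""
    median = statistics.median(values)
    # Typical distance from the median (median absolute deviation).
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # no spread to measure against, so nothing is flagged
    return [v for v in values if abs(v - median) / mad > threshold]


# Hypothetical card transactions in dollars; one of them looks suspicious.
transactions = [20, 22, 19, 21, 20, 23, 500]
print(find_anomalies(transactions))  # [500]
```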

Reinforcement Learning

In reinforcement learning (RL), a program learns by trial and error, receiving feedback in the form of rewards or penalties for its actions. Any negative number that gets assigned is a penalty.

The larger the penalty, the more the algorithm will learn to avoid that particular action, and it will try again until positive numbers, called rewards, are assigned. It will continue this process until it is properly rewarded. The goal of RL is to maximize its rewards over time by finding a sequence of actions that leads to the highest possible reward.

One of the defining features of RL is the use of a feedback loop driven by the agent’s actions. (An agent is the decision-making unit responsible for choosing actions in the environment provided to it.) The loop permits the agent to learn from experience and adjust its behavior accordingly.

The feedback loop works as follows:

- The agent takes an action in its environment.

- The environment provides the agent with feedback about the action, such as a reward or punishment.

- The agent then updates its policy based on the feedback.

- The agent repeats the three steps above until it learns to take actions that lead to desired outcomes (rewards).
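
The feedback loop above can be sketched in Python as follows. This is a deliberately tiny example under stated assumptions: the environment has only two actions with made-up rewards, and the agent's "policy" is simply to prefer whichever action it currently values most while occasionally exploring. All names and numbers are hypothetical.

```python
import random

# Hypothetical environment: "left" is always punished, "right" is always rewarded.
REWARDS = {"left": -1.0, "right": 1.0}


def train(episodes=50, learning_rate=0.5, explore=0.2, seed=0):
    """Run the feedback loop and return the value the agent learned for each action."""
    rng = random.Random(seed)
    values = {action: 0.0 for action in REWARDS}
    for _ in range(episodes):
        # Step 1: the agent takes an action (sometimes a random one, to explore).
        if rng.random() < explore:
            action = rng.choice(list(REWARDS))
        else:
            action = max(values, key=values.get)
        # Step 2: the environment answers with a reward or a penalty.
        reward = REWARDS[action]
        # Step 3: the agent nudges its estimate for that action toward the feedback.
        values[action] += learning_rate * (reward - values[action])
    return values


values = train()
best_action = max(values, key=values.get)
print(best_action)  # right
```

After a few rounds of trial and error, the penalized action ends up with a negative value and the rewarded one with a positive value, so the agent settles on the rewarded action.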

RL has been applied to a wide range of problems, such as games, robotics, and autonomous driving. It is particularly useful in scenarios where the best action may not be immediately clear and where exploration is necessary to find the best solution.

Conclusion

Overall, these AI methods are widely used in various industries and applications. We will continue to see growth and development as artificial intelligence technology advances.

What are the advances or dangers that AI can bring to the future? Read our article on the Pros and Cons of AI to find out.

Machine Learning Terms to Know

- Computer Instruction

- Computer Program

- Algorithm

- Data Points

- Patterns

- Labeled Data

- Dataset

- Data Model

- Pattern Recognition

- Machine Learning

- Binary Classification

- Multiclass Classification

- Supervised Learning

- Unsupervised Learning

- Reinforcement Learning