AI Review

In our Artificial Intelligence 101 article, we discussed binary classification with supervised learning using the fly example. We then looked at the limitations of this type of classification: it has only two classes of labeled data to compare with the unknown data.

In the fly example, we can only determine whether the insect is a flying or a crawling insect. If we want to be more precise, such as determining what type of fly it is, we need more categories of labeled data. This is called multiclass classification.

As we explore multiclass classification, we will also delve into the types of models used for this process. Before we begin, though, let’s clarify a couple of AI terms, starting with data points, which we only touched on in our AI 101 article.

What is a Data Point?

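A data point is a single piece of information, such as one measured attribute or one complete example, that is fed into a machine learning algorithm (the model is what the algorithm produces after learning from the data). It is one component of a larger whole. In general, the more relevant and accurate data points there are, the more reliable the model’s conclusions will be.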

What is a Dataset?

A dataset is a collection of data points. A dataset can contain any number of data points, from a few to billions.
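To make the two terms concrete, here is a minimal Python sketch of how a small fly dataset might be stored. The attributes (`wing_length_mm`, `body_length_mm`), the `FlyDataPoint` type, and every value are hypothetical, invented only for illustration; each labeled example (one fly) is treated as a data point described by a few attributes.

```python
from typing import NamedTuple

class FlyDataPoint(NamedTuple):
    """One labeled example (a single fly): a few measured attributes plus its class."""
    wing_length_mm: float   # hypothetical attribute
    body_length_mm: float   # hypothetical attribute
    label: str              # the known class, e.g. "house fly"

# A dataset is simply a collection of data points (values are made up).
dataset = [
    FlyDataPoint(6.5, 7.0, "house fly"),
    FlyDataPoint(2.3, 2.5, "fruit fly"),
    FlyDataPoint(12.0, 20.0, "horse fly"),
    FlyDataPoint(3.8, 4.0, "horn fly"),
]

print(len(dataset))      # number of data points in the dataset
print(dataset[0].label)  # class label of the first data point
```

A real dataset would hold far more data points, and many more attributes per fly, but the shape stays the same: a list of labeled examples.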

Data Point and Dataset Usages

Our fly example illustrates AI data points and datasets, but in the real world, these concepts apply to a wide variety of tasks. Below are just a few of them.

- Financial predictions

- Self-driving cars

- Facial recognition

- Medical diagnosis

- Agriculture

- Sales forecasting

- Fraud detection systems

- Customer service chatbots

In each of these applications, the algorithm reads the unknown data points it is given and compares them to the labeled data. Generally, the more relevant data points that are supplied, the more accurate the model will be.

Now, let’s look at the AI models that are available.

Honor Thy Neighbor! The K-Nearest Neighbor Model

One of these models is called K-Nearest Neighbors (KNN). This algorithm looks at the unknown piece of data and compares it to the labeled data. This is nothing new; we learned about it in our previous lesson on supervised learning. Now, however, the comparisons will be made against more than two classes.

In our fly example, let’s create four classes, one for each type of fly: house fly, horse fly, fruit fly and horn fly. Each of these flies has specific characteristics, or patterns of data points, that distinguish it from the other classes.

Example 1: Imagine you have a big puzzle with different pieces. Each piece of the puzzle represents a data point. Just like how each puzzle piece is unique and contributes to the overall picture, a data point is a single piece of information or observation that helps us understand or solve a problem.

Example 2: Let’s say we want to know the favorite color of each student in a class. Each student’s favorite color is a data point. We can collect all these data points to find patterns or make conclusions about the class’s preferences.
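The favorite-color example can be sketched in a few lines of Python. The list of colors below is hypothetical class data; `collections.Counter` tallies the data points so a pattern (the most popular color) becomes visible.

```python
from collections import Counter

# Each student's favorite color is one data point (hypothetical class data).
favorite_colors = ["blue", "red", "blue", "green", "blue", "red"]

# Tally the data points to reveal a pattern in the class's preferences.
counts = Counter(favorite_colors)
most_popular, votes = counts.most_common(1)[0]

print(most_popular, votes)  # blue 3
```

No single data point tells us much on its own; only by collecting and counting all of them does the class's preference show up.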

In simpler terms, a data point is like a puzzle piece that provides us with a small part of the whole picture or information we are trying to understand. By putting all the data points together, we can learn more about a situation, solve problems, or make decisions based on the available information.

In other words: A data point is a small piece of information or a single example that helps us understand or learn about a larger group or class of things. It’s like having one item or measurement from a collection that represents the whole group.

The K-nearest neighbors (KNN) algorithm compares the unknown (given) data to data points that have already been labeled with specific classes. The more of the nearby data points that belong to a particular class, the more likely it is that the unknown data belongs to that class.

The algorithm examines the data points of the unknown fly and asks: which known fly category is the closest neighbor to the unknown fly? Technically speaking, which labeled data points are the closest match to the unknown fly’s data points? Looking at it in reverse, which classes are the furthest from the unknown data?

This is the KNN process: it finds the labeled data points whose pattern is closest to that of the unknown data. Each of these nearest data points casts a vote for its own class.

Another way of explaining KNN is that once the K nearest neighbors are identified, the unknown data point is assigned the class label that is most common among those neighbors. In other words, the majority class among the K nearest neighbors determines how the unknown data point is classified.
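Assuming the K nearest neighbors have already been found (their labels are hard-coded below purely for illustration), the majority vote can be sketched in Python as follows. The function name `majority_vote` is a hypothetical helper, not part of any library.

```python
from collections import Counter

def majority_vote(neighbor_labels: list[str]) -> str:
    """Return the class label that appears most often among the K nearest neighbors."""
    # most_common(1) returns [(label, count)] for the most frequent label;
    # on a tie, Counter keeps the label that was encountered first.
    label, _count = Counter(neighbor_labels).most_common(1)[0]
    return label

# Hypothetical labels of the K = 5 nearest neighbors of an unknown fly.
nearest_labels = ["house fly", "horn fly", "house fly", "fruit fly", "house fly"]
print(majority_vote(nearest_labels))  # house fly
```

With more than two classes, ties are possible even when K is odd, so it is worth knowing how a given implementation breaks them; here the first label encountered wins.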

But How Do We Measure These Distances?

Do the Math

Math is used (don’t worry, it is simply high school math) to determine which labeled data points are closest to the unknown data. The number of closest neighbors we take into account is designated by the letter K.

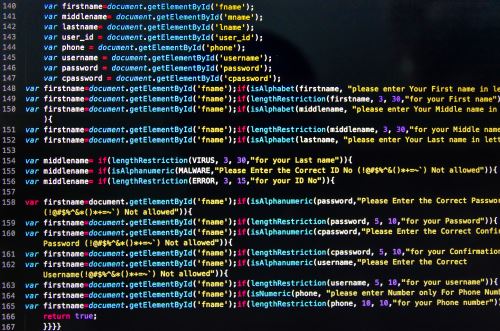

The math involved is the distance between two points. If you don’t remember how to calculate the distance between two points, you can go to this refresher course. This measurement is called the Euclidean distance, and the KNN computer instructions are based on this concept.
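For reference, the Euclidean distance between two points $(x_1, y_1)$ and $(x_2, y_2)$ on a graph, and its standard generalization to data points $\mathbf{p}$ and $\mathbf{q}$ with $n$ attributes each, is:

$$
d = \sqrt{(x_2 - x_1)^2 + (y_2 - y_1)^2}
\qquad
d(\mathbf{p}, \mathbf{q}) = \sqrt{\sum_{i=1}^{n} (q_i - p_i)^2}
$$

For example, the distance between $(1, 2)$ and $(4, 6)$ is $\sqrt{(4 - 1)^2 + (6 - 2)^2} = \sqrt{9 + 16} = \sqrt{25} = 5$.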

Each labeled data point is given a number that represents its distance to the unknown data point. The lower the number, the more closely that data point resembles the unknown. The K data points with the lowest numbers become the nearest neighbors, and each of them casts a vote for its class.

In our fly example, Euclidean distance tells us which labeled flies have the shortest line to the unknown fly on the graph.

The KNN algorithm is based on the idea that similar things exist in close proximity, so the best matches are the labeled data points with the shortest lines to the unknown data point on the graph.
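Putting the pieces together, here is a minimal KNN sketch in plain Python: measure the Euclidean distance to every labeled fly, sort by distance, and let the K closest flies vote. The two attributes, the training values, and the names `euclidean_distance` and `knn_classify` are hypothetical, chosen only for illustration; this is a teaching sketch, not a production implementation.

```python
import math
from collections import Counter

# Hypothetical labeled flies: (wing_length_mm, body_length_mm, label).
# The numbers are invented for illustration only.
training_data = [
    (6.5, 7.0, "house fly"),
    (6.8, 7.2, "house fly"),
    (2.3, 2.5, "fruit fly"),
    (2.1, 2.4, "fruit fly"),
    (12.0, 20.0, "horse fly"),
    (11.5, 19.0, "horse fly"),
    (3.8, 4.0, "horn fly"),
    (3.6, 4.2, "horn fly"),
]

def euclidean_distance(a: tuple[float, ...], b: tuple[float, ...]) -> float:
    """Straight-line distance between two points with the same number of attributes."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_classify(unknown: tuple[float, float], k: int = 3) -> str:
    """Label `unknown` with the majority class among its k nearest labeled neighbors."""
    # 1. Measure the distance from the unknown fly to every labeled fly.
    distances = [
        (euclidean_distance(unknown, (wing, body)), label)
        for wing, body, label in training_data
    ]
    # 2. Sort so the smallest distances (closest neighbors) come first.
    distances.sort(key=lambda pair: pair[0])
    # 3. Let the k closest neighbors vote; the most common label wins.
    nearest_labels = [label for _dist, label in distances[:k]]
    return Counter(nearest_labels).most_common(1)[0][0]

print(knn_classify((6.0, 6.9)))  # house fly
```

Here the unknown fly's three nearest neighbors are two house flies and one horn fly, so the vote goes to "house fly". Python 3.8+ also offers `math.dist` for the same distance calculation; the explicit version above simply mirrors the formula.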

What is a Predictor?

A predictor is what an algorithm produces after learning from a dataset: a trained model that it then uses to make predictions on new data.

The Regression Model

This algorithm is a supervised learning model used to predict numeric values, such as a future price or quantity. It takes the input data, known here as independent variables, and makes predictions based on the patterns it learned from the dataset. In other words, regression models are trained on a dataset of historical data, from which the model learns the relationship between the independent and dependent variables. The trained model can then be used to predict the value of the dependent variable for new data points.
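As one illustration, here is a minimal sketch of simple linear regression, a common type of regression model, fitted with the ordinary least-squares formulas in plain Python. The month/sales data and the helper name `fit_simple_linear_regression` are hypothetical; the data was chosen to lie on a straight line so the result is easy to check by hand.

```python
def fit_simple_linear_regression(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Fit y = slope * x + intercept by ordinary least squares; return (slope, intercept)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = sum of (x deviation * y deviation) / sum of squared x deviations.
    cross_deviation = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    squared_deviation = sum((x - mean_x) ** 2 for x in xs)
    slope = cross_deviation / squared_deviation
    # The fitted line always passes through the point (mean_x, mean_y).
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Hypothetical historical data: month number (independent variable)
# and sales in thousands (dependent variable).
months = [1, 2, 3, 4, 5]
sales = [3, 5, 7, 9, 11]

slope, intercept = fit_simple_linear_regression(months, sales)
next_month = 6
predicted_sales = slope * next_month + intercept
print(slope, intercept, predicted_sales)  # 2.0 1.0 13.0
```

The model learns the relationship "sales rise by 2 each month, starting from 1" from the historical data, then applies it to a new data point (month 6) to predict the dependent variable.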

Conclusion

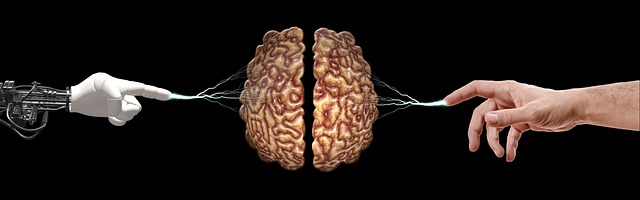

- A major advantage of AI lies in its ability to improve efficiency. Similar to the Industrial Revolution, AI is streamlining the manufacturing process, increasing productivity and reducing human error.

- Artificial Intelligence enhances decision-making through data analysis and predictive capabilities. In healthcare, AI can analyze vast medical datasets, aiding doctors in diagnosing diseases and suggesting treatment plans. Financial institutions rely on AI for fraud detection, increasing security and efficiency, and governments use machine learning to predict criminal activity and allocate resources for improved public safety.

- Machine learning algorithms can generate art, compose music, and write literature. In design and engineering, they assist in creating more efficient and aesthetically pleasing products.

- AI is expediting scientific research by rapidly analyzing extensive datasets, accelerating discoveries in genomics, drug development, and climate science.

- This technology also holds promise for addressing global challenges in areas such as agriculture, where it can enhance crop yields. AI analytics also improve disaster prediction and response.

- Natural Language Processing (NLP), together with voice recognition, enables better interaction with digital devices, especially for people with disabilities.

As AI continues to advance, its potential to reshape industries and improve the quality of life for people around the world is extremely promising, but we must ensure that machine learning does not fall into the wrong hands. Ethical considerations and responsible development must remain at the forefront so that the benefits of artificial intelligence are harnessed fairly and equitably throughout the world!