The original franchise was not just a great sci-fi series. It catalyzed innovation, inspiration, and collaboration for people worldwide. Concepts such as warp drive, transporter technology, and deflector shields have sparked discussion, and terms such as dilithium crystals initiated conversations. Let’s take a look at what Star Trek has done for space exploration and society as a whole.

Inspiring Future Generations

The franchise ran from 1966-1969 and became a milestone in ingenuity. Many people looked to the skies, wondering what was out there and how they could be part of the science that would lead them and others to space exploration.

Star Trek was and still is an inspiration to countless individuals seeking careers in science, technology, engineering, mathematics (STEM), and of course, space exploration. Many astronauts, scientists, engineers, and space enthusiasts have cited Star Trek as a formative influence on their interest in space and their decision to pursue careers in related fields.

Fans were fascinated as they watched the Enterprise crew encounter new civilizations. Some were more advanced and some whose evolution was considered primitive by 23rd century Earth standards. That is where the Prime Directive came into play. A central tenet of Starfleet and the United Federation of Planets’ principle prohibits interference with the internal development of alien civilizations, particularly pre-warp ones, meaning they have not yet developed the capability for faster-than-light travel.

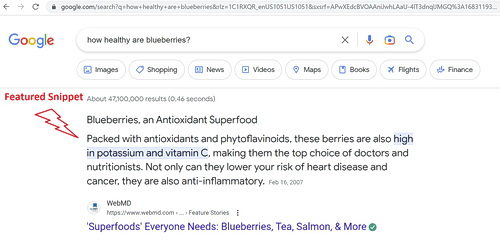

The stories captivated audiences as to how the crew would struggle to maintain the directive when encountering obstacles they had to overcome. One of the most enduring encounters was in “The City on the Edge of Forever” where Dr. McCoy accidentally went back in time and somehow changed Earth’s history. It was up to Kirk and Spock to find McCoy and reverse what he did. Shatner mentioned this episode as his favorite during an interview with renowned astrophysicist Neil Degrasse Tyson in what is believed to be Tyson’s office at the Museum of Natural History in New York.

It wasn’t just the prime directive the Enterprise crew had to deal with. There were also villainous civilizations that found Starfleet to be a threat to them, namely the Klingons as well as other aliens such as the Gorns – a reptilian humanoid species who Kirk was forced to fight on an alien planet in the episode “Arena“.

The crew had numerous encounters with the Klingons. In “Day of the Dove,” the crew becomes embroiled in a conflict with Klingons, controlled by a mysterious entity that thrives on hatred and conflict.

The Klingons were always a thorn in the side of Star Fleet; however, that changed in the Next Generation when a peace treaty was consummated with Lt. Commander Worf as the security officer on the bridge. But we’ll venture into Captain Picard’s universe in a separate article, focusing only on the original series here.

Overall, “Star Trek” is a cultural phenomenon that has inspired generations of fans by exploring bold ideas, emphasizing inclusivity and diversity, and envisioning a future where humanity’s potential knows no bounds.

The Characters

A Lesson in Diversity

The show’s optimistic vision of humanity’s future in the 23rd century depicted people from diverse backgrounds working together. This resonated with audiences worldwide and represented a peaceful coexistence between all parties back on Earth.

Lieutenant Uhura

Most notably, in the 10th episode of the third season titled “Plato’s Stepchildren,” Captain Kirk (William Shatner) kissing Lieutenant Uhura (Nichelle Nichols) broke barriers that echoed across the country as the first interracial kiss on TV, and still today, it is looked upon as a milestone that has advanced racial relations in TV and across all media platforms.

Nichols was the first African-American woman to play a lead role on television as Communication Officer Lieutenant Uhura. She met Dr. Martin Luther King, who was inspired by her character on Star Trek and said to her, “Do you not understand what God has given you? … You have the first important non-traditional role, a non-stereotypical role. … You cannot abdicate your position. You are changing the minds of people across the world, because for the first time, through you, we see ourselves and what can be.”

Chekov

Star Trek was aired during the Cold War, and the Russian national Chekov, played by Walter Koenig, no doubt sent a clear message that there was mutual peace between the US and the Soviet Union in the 23rd century. Interestingly enough, this did become a reality after the collapse of the Soviet regime in 1991.

Unfortunately, recent current events are proving otherwise, as Valdimar Putin’s attack on Ukraine is no doubt reminiscent of past Soviet colonization. Despite this, the International Space Station (ISS) is still strong. It is a collaborative project involving multiple space agencies, including NASA (United States), ESA (European Space Agency), JAXA (Japan Aerospace Exploration Agency), CSA (Canadian Space Agency) and yes, Roscosmos (Russia).

What happens after the Ukraine war remains to be seen, but let’s hope that when the 23rd century arrives, Gene Roddenberry’s view of future world peace becomes a reality.

Lieutenant Sulu

George Takei portrayed Hikaru Sulu in the original Star Trek series and the first six Star Trek films. He served as the helm officer aboard the USS Enterprise under Captain Kirk. He’s known for his calm demeanor and exceptional piloting skills, expertly navigating the Enterprise through dangerous situations.

Although “Hikaru” is a typical Japanese first name, “Sulu” isn’t a Japanese surname. It refers to the Sulu Sea, near the Philippines. This is reportedly what Gene Roddenberry wanted – some ambiguity to his origin. Since Sulu was never referred to as specifically Japanese, it allowed Roddenberry to represent him with a broader Asian background, allowing him to represent all Asians within the entire Star Trek universe.

It is no coincidence that in real life, George Takei is full Japanese and felt the wrath of the American internment camps after the Pearl Harbor attacks. He has since become a vocal advocate for racial and social justice and has talked about his experience at previous Star Trek conventions.

George Takei was born on April 20, 1937, and is 86 years old.

Scotty

Lieutenant Scott, or “Scotty” (James Doohan), as Captain Kirk called him, was the chief engineer of the Enterprise. He is known for his technical expertise and his ability to keep the enterprise running smoothly, even under the most challenging circumstances. Scotty’s catchphrase, “I’m giving her all she’s got, Captain!” has become iconic in popular culture.

His character is depicted as fiercely loyal to the captain and the crew of the Enterprise. He is often portrayed as having a gruff exterior but also demonstrates compassion and camaraderie with his fellow crew members.

Scotty’s loyalty takes control with support from Dr. McCoy

Scott’s background was from Scotland. His strong Scottish accent and colorful expressions are instantly recognizable. In addition to his engineering skills, Scotty is known for his fondness for Scotch whisky, which adds depth to his character and provides moments of levity in the series. The term “Beam me up Scotty” is a common idiom known worldwide.

Dr. McCoy

This author was privileged to meet Nimoy while visiting the Jewish Museum in NYC. I asked him what he thought of the other Star Trek series. He responded, “I think of them as my grandchildren. They come, and then they go away”.

Leonard Nimoy passed away in 2015 from COPD, but his influence on science fiction and popular culture remains immense, especially among NASA engineers. His mindset and discipline helped bridge the gap between human emotions and logic, enlightening children and adults to think clearly and logically when encountering problems.

Kirk

Captain James Tiberius Kirk, portrayed by William Shatner, is one of the most iconic characters in science fiction history. As the captain of the starship USS Enterprise, his persona became synonymous with strength, leadership, and exploration of the unknown, along with the phrase “Boldly go where no man has gone before“. And boldly, they did travel through the galaxy, encountering new life and civilizations. Some friendly, some not so friendly, and some war-like.

William Shatner always had a passion for space travel. In 1978, he recorded his version of Rocket Man at the Sci-fi Awards. But his passion did not end there. On October 13, 2021, at age 90, he went from fictional to real spaceman aboard billionaire Jeff Besos’s Blue Origin spacecraft and became the oldest human to ever set foot into space.

“I saw a cold, dark, black emptiness. It was unlike any blackness you can see or feel on Earth. It was deep, enveloping, all-encompassing. I turned back toward the light of home. I could see the curvature of Earth, the beige of the desert, the white of the clouds, and the blue of the sky. It was life. Nurturing, sustaining, life. Mother Earth. Gaia. And I was leaving her,” reads an excerpt from “Boldly Go” that was first published in Variety Magazine.”

“I’m so filled with emotion about what just happened. It’s extraordinary, extraordinary. It’s so much larger than me and life. It hasn’t got anything to do with the little green men and the blue orb. It has to do with the enormity, quickness, and suddenness of life and death.”

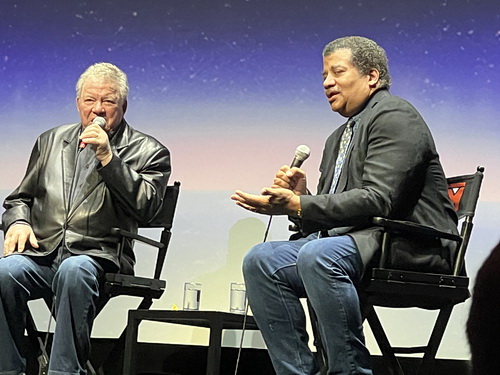

Shatner, at age 93, is still going strong. On March 17, 2024, he was the guest at the Alice Tully Hall in Lincoln Center, NYC, hosted by Neil Degrasse Tyson for a Q&A session after screening his new movie “You Can Call Me Bill.”

Mission Names and Concepts

NASA and other space agencies have drawn inspiration from the show when naming missions or developing mission concepts. The Space Shuttle Enterprise was named after the show. It’s a nod to the franchise, reflecting its enduring influence on the community and its role in shaping the language and imagery of space exploration.

Fueling Public Interest

The series’ portrayal of space travel, alien worlds, and encounters with extraterrestrial life has captured the imagination of millions of people, inspiring them to learn more about the universe. The famous Star Trek conventions and related events have provided forums for enthusiasts to unite, share their passion for space exploration, and engage with real-world space missions.

Shaping Spacecraft Design

The original spacecraft, the USS Enterprise, designated by NCC-1701 (Navel Construction Contract, 17 for Starfleet’s 17th starship design, and 01 – the first of this design series), has influenced real-world spacecraft planning. While the functionality of these fictional vessels may differ from actual spacecraft, their sleek and futuristic designs have inspired engineers to think creatively about spacecraft aesthetics and functionality.

Promoting Scientific Inquiry

Star Trek’s emphasis on exploration has promoted a culture of curiosity and exploration in society. The series’ portrayal of futuristic technologies and scientific concepts has sparked interest in science and encouraged viewers to learn more about the universe and the possibilities of space exploration. Concepts introduced in Star Trek, such as the Prime Directive and the exploration of strange new worlds, have inspired discussions about ethics, philosophy, and humanity’s role in the cosmos.

Inspiring Technological Innovation

Star Trek has inspired the development of numerous technologies that were once considered futuristic but have since become reality or influenced real-world technology development. Examples include cell phones (inspired by the communicators used by Starfleet officers), tablet computers (similar to the PADD devices seen on the show), voice-activated computers (reminiscent of the ship’s computer), medical imaging devices (such as MRI and CT scanners), and more. The franchise’s imaginative depictions of technology have inspired scientists, engineers, and inventors to push the boundaries of what is possible and strive to turn science fiction into reality.

Exploring Moral and Ethical Dilemmas

Star Trek often explores complex moral and ethical dilemmas through its storytelling. Episodes frequently address issues such as the ethics of scientific experimentation, the consequences of war and violence, and the challenges of diplomacy and cooperation between different cultures and species. By tackling these issues thoughtfully and thoughtfully, Star Trek encourages viewers to reflect on their values and beliefs.

Conclusion

Star Trek’s influence on space exploration is profound and far-reaching, extending from its role as a source of inspiration and imagination to its impact on technology, collaboration, and public engagement.

By envisioning a future where humanity explores the cosmos with curiosity, courage, and cooperation, Star Trek has helped shape the collective aspirations and ambitions of the space exploration community. It continues to inspire generations of space enthusiasts worldwide.