Quantum Introduction

The term ‘Quantum Computing’ has not yet gained much traction in the tech world, and those who have explored the subject may find it confusing, to say the least.

Some experts believe that this is not just the future of computing, but the future of humanity. Quantum computing moves beyond the binary computer and ventures into computing at the subatomic level.

If you don’t have a clue what we are talking about, you are not alone. Stay with us through this article, where we discuss quantum computing in detail: what it is, how it will change the tech world, and its practical implications (for better or worse).

Before we discuss this potentially life-changing advancement, it is necessary to cover the foundation on which quantum computing is based: quantum theory.

What is Quantum?

A quantum (plural: quanta) is, in simple terms, the minimum amount of energy that can take part in any physical interaction.

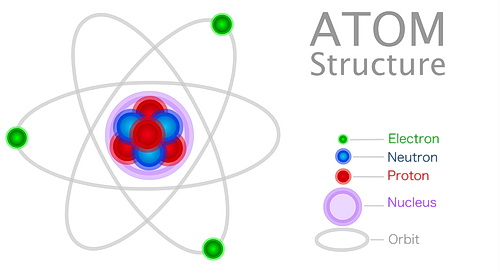

Taking examples from particle interactions within the atom, a quantum of light is a photon, and a quantum of electricity is an electron. No interaction can involve a smaller amount than one of these particles.

In the Beginning

The industrial advances of the 20th century were among the greatest milestones of modern history. From the automobile to industrial steel, elevators, and aircraft, they gave birth to a plethora of things that now define our civilization and will continue to shape our future.

Enter the 21st century, and we are watching a transition from the tangible to the intangible (virtual) world: notably computer technology, with its hardware, its software, and the World Wide Web.

Among the many incredible things emerging from this technological revolution is the colossal development in physics, specifically quantum theory. We will keep the explanation of quantum theory as simple as possible so that it stays both interesting and informative.

Modern Physics

The field of physics is divided into two definite branches: classical and modern. The former branch was established during the period of the Renaissance and continued to progress after that.

Classical physics is based on the ideas of Galileo and Newton. Their concepts focus on the macroscopic world around us, the part that is visible to the naked eye.

Conversely, modern physics is about analyzing matter and energy at microscopic levels.

While we are at it, it is important to clarify that quantum theory doesn’t just refer to one idea or hypothesis. It is a set of several principles. We will discuss them simply and remain focused on the items that are relevant to quantum computing.

- The work of physicists Max Planck and Albert Einstein in the early 20th century established that energy can exist in discrete units called ‘quanta’. This hypothesis contradicts the classical view, in which energy varies continuously.

- In the following years, Louis de Broglie extended the theory by suggesting that at microscopic (atomic and subatomic) levels, matter and energy are not sharply distinct: both can behave as either particles or waves, depending on the conditions.

- Lastly, Heisenberg proposed the uncertainty principle, which states that complementary properties of a subatomic particle, such as position and momentum, cannot both be measured precisely at the same time.
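
In standard textbook notation, the three ideas above are commonly summarized by the relations below. The symbols are conventional ones rather than anything defined in this article: $E$ is the energy of one quantum, $h$ is Planck's constant, $\nu$ is frequency, $\lambda$ is the wavelength associated with a particle of momentum $p$, and $\Delta x$ and $\Delta p$ are the uncertainties in position and momentum.

```latex
\begin{align}
E &= h\nu \\
\lambda &= \frac{h}{p} \\
\Delta x \, \Delta p &\ge \frac{\hbar}{2}, \qquad \hbar = \frac{h}{2\pi}
\end{align}
```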

Niels Bohr’s Interpretation of Quantum Theory: The Primal Basis of Quantum Computing

During the period when quantum theory was extensively discussed among top physicists, Niels Bohr came up with an important interpretation of this theory.

He suggested that it cannot be determined whether light is composed of particles or waves until it is actually measured. This dual nature is called wave-particle duality.

The famous Schrödinger’s Cat thought experiment is an easy way to understand this concept. In it, a cat enclosed in a box with poison is considered both dead and alive until the box is opened and the cat is observed.

Computer Algorithms

This is the point where the theory demonstrates its potential. First, though, a definition: an algorithm is a set of instructions that a computer follows to carry out a function. For example, you tell the computer to print a document; the computer reads the instructions (the algorithm) and performs the printing function.

To understand the quantum-based algorithm, it is essential to understand how contemporary/conventional computing systems work.

Whether it’s a handheld gadget or a supercomputer working in the server room of Google, every current computing device employs the binary language, where every bit of information can exist in either one of two states: 0 or 1 (hence ‘binary’), but not both states at once.

Quantum algorithms, by contrast, build on the idea that a particle-wave system can exist in a combination of multiple states at any given time.

This means that data stored in a quantum system is not limited to two states: a quantum bit (also referred to as a ‘qubit’) can be in a blend of 0 and 1 at once. This makes qubits more powerful than the bits of conventional binary computing.
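
A minimal sketch of this idea in Python, using only the standard library. It assumes the usual textbook model (not spelled out in this article) in which a qubit is described by two amplitudes, here called `alpha` and `beta`, and measuring it yields 0 with probability |alpha|² and 1 with probability |beta|². The function name `measure` is purely illustrative, and this is a classical simulation rather than real quantum hardware.

```python
import math
import random


def measure(alpha, beta, rng):
    """Simulate one measurement of a qubit with amplitudes alpha and beta.

    Returns 0 with probability |alpha|^2, otherwise 1.
    """
    probability_of_zero = abs(alpha) ** 2
    return 0 if rng.random() < probability_of_zero else 1


# Equal superposition: the qubit is "half 0, half 1" until it is measured.
alpha = beta = 1 / math.sqrt(2)

# The squared magnitudes of the amplitudes must sum to 1.
assert math.isclose(abs(alpha) ** 2 + abs(beta) ** 2, 1.0)

rng = random.Random(0)  # fixed seed so the run is repeatable
counts = [0, 0]
for _ in range(10_000):
    counts[measure(alpha, beta, rng)] += 1

print("times measured as 0:", counts[0])
print("times measured as 1:", counts[1])
```

Each individual measurement still returns a plain 0 or 1; the superposition only shows up in the statistics over many runs, which come out close to an even split here.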

Standard Binary Computing Vs. Quantum Computing

The fact that a quantum bit can exist in multiple states gives quantum computing an edge over conventional binary computing. A simple example shows how a quantum bit differs from its classical counterpart.

Picture a cylindrical rod whose two ends are labeled 1 and 0. A classical bit sits at one end or the other: when it is a 1, it cannot also be a 0.

A quantum bit, on the other hand, can exist in every possible state between 1 and 0 simultaneously.

The above explanation shows that quantum bits can hold an unprecedented amount of information, and hence that computing governed by this type of algorithm can exceed the processing of any classical computing machine.

A quantum computer can compute every instance between 0 and 1 simultaneously, an ability often called quantum parallelism.
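
One way to make this concrete is to count how many numbers a classical machine would need just to write down the state of a group of qubits. The sketch below assumes the standard state-vector model, in which n qubits are described by 2^n complex amplitudes, and a hypothetical storage cost of 16 bytes per amplitude. The function names are illustrative.

```python
# Assumption: one complex amplitude is stored as two 64-bit floats.
BYTES_PER_AMPLITUDE = 16


def amplitudes_needed(n_qubits):
    """Number of complex amplitudes in the state vector of n_qubits qubits."""
    return 2 ** n_qubits


def classical_memory_bytes(n_qubits):
    """Bytes a classical simulator would need to hold that state vector."""
    return amplitudes_needed(n_qubits) * BYTES_PER_AMPLITUDE


for n in (1, 2, 10, 30, 50):
    print(f"{n:>2} qubits: {amplitudes_needed(n):,} amplitudes, "
          f"{classical_memory_bytes(n):,} bytes")
```

Under these assumptions, every added qubit doubles the memory required, so 30 qubits already need 16 GiB.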

Quantum Entanglement

Apart from storing more information than classical computers, quantum computing can also exploit the principle of entanglement. In simple words, entangled quantum bits are linked so that the state of one cannot be described independently of the others, and measuring one immediately tells you something about its partners.
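
A small classical simulation can illustrate the kind of correlation that entanglement produces. The sketch assumes a standard two-qubit example known as a Bell state, which is not introduced in this article, and it only reproduces the measurement statistics rather than the underlying physics. The names `bell_state` and `sample_pair` are illustrative.

```python
import math
import random

# Amplitudes for the four two-qubit outcomes, in the order 00, 01, 10, 11.
# In this Bell state only 00 and 11 can ever be observed.
bell_state = [1 / math.sqrt(2), 0.0, 0.0, 1 / math.sqrt(2)]


def sample_pair(state, rng):
    """Simulate measuring both qubits once; returns (first_bit, second_bit)."""
    probabilities = [abs(amplitude) ** 2 for amplitude in state]
    index = rng.choices(range(len(state)), weights=probabilities)[0]
    return index >> 1, index & 1


rng = random.Random(1)  # fixed seed so the run is repeatable
results = [sample_pair(bell_state, rng) for _ in range(1_000)]

# Each qubit on its own looks random, yet the two always agree.
print("pairs that agree:", sum(a == b for a, b in results), "of", len(results))
```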

Beneficial Uses of Quantum Computing

The processing capabilities of quantum computing make it an ideal machine to carry out many tasks where conventional computers fall short.

Science and Life Sciences

The study of complex atomic and molecular structures and reactions is no easy task. A lot of computing capacity is required to simulate such processes.

For instance, the exact simulation of even relatively small molecules is beyond the reach of available conventional computing technology. So, quantum computing can play a significant role in understanding many of the concealed facts of nature and, more particularly, of life. Much chemical, physical, and biological research that has been stalled for years could take off once quantum computers become a reality.

Artificial Intelligence and Machine Learning

Even though scientists have made significant inroads in machine learning and AI with existing computing resources, quantum computing may help take the giant leap toward machines with human-like intelligence.

Machine learning feeds on big data: colossal amounts of information, above and beyond what conventional databases contain. And the more information you have, the more intelligent you become!

With the fast processing of quantum computing, conventional AI could become obsolete, replaced by a new and more powerful form of artificial intelligence.

Improvement of General Optimization Procedures

With the addition of big data, the processing that takes place involves more than just reading information. It also involves the ability to make more decisions.

These decisions are expressed as if/then conditions: if something exists and something else acts on it, what is the outcome? Each condition is evaluated using variables.

So, the more data, the more variables to calculate. Put another way, the number of permutations and combinations increases, and with it the amount of processing power required. When this happens, the amount of data to be processed can increase exponentially.
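
The growth described above can be checked with a few lines of Python. The sketch assumes two simple, illustrative models that the article does not specify: n independent yes/no conditions give 2^n possible combinations, and n items can be arranged in n! different orders. The function names are hypothetical.

```python
import math


def condition_combinations(n_conditions):
    """Ways to set n independent yes/no (if/then) conditions."""
    return 2 ** n_conditions


def orderings(n_items):
    """Number of distinct orders (permutations) of n items."""
    return math.factorial(n_items)


for n in (5, 10, 20):
    print(f"{n:>2} variables: {condition_combinations(n):,} combinations, "
          f"{orderings(n):,} orderings")
```

Going from 10 to 20 variables multiplies the number of combinations by about a thousand, which is why the processing required can outrun the growth of the data itself.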

For example, optimizing a financial plan might require processing several petabytes of data, equivalent to about 500 billion pages of printed text. Computing on that scale may only be achievable with the processing power of quantum machines.

Other Side of the Coin: The Dangers Involved with Quantum Computing

One should not be surprised by this heading. We have seen through the course of history how the advent of new technology, intended for the benefit of humankind, is followed by its misuse.

One example is Einstein’s famous equation E = mc², which gave scientists the idea of building an atomic bomb. Although Einstein was a man of peace and his theory was never intended to be used for destructive purposes, it was used that way anyway. In the same way, the unrestrained processing power of quantum computing can be harnessed for nefarious purposes.

Quantum Computing Puts Data Encryption Practices in Great Peril

Data is a precious commodity, and as we know, every precious commodity is vulnerable to vandalism, breaches, and theft. To address this vulnerability, computer scientists have developed encryption schemes that lock the data so that only those who hold the encryption key can access it.

Unauthorized parties can only get around this encryption with a technique called brute-force cracking, which tries every possible key in turn. But it is important to mention that brute-force attacks are only practical against simple passwords that consist of a few bytes.

Let’s try to better understand this with the help of numbers.

With today’s computers, it could take more than a billion billion years to crack data that is protected by what is called a 128-bit encryption key, which is widely used by financial services on the Internet.
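
As a rough sanity check of that figure, the sketch below estimates how long it would take to try every possible 128-bit key. The guessing rate of one trillion keys per second is a hypothetical assumption chosen for illustration, not a measured figure, and the function name is illustrative.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600

# Assumption: a hypothetical classical attacker testing 10^12 keys per second.
ASSUMED_KEYS_PER_SECOND = 1e12


def years_to_try_all_keys(key_bits, keys_per_second):
    """Years needed to test every key of the given length at a fixed rate."""
    total_keys = 2 ** key_bits
    return total_keys / keys_per_second / SECONDS_PER_YEAR


years = years_to_try_all_keys(128, ASSUMED_KEYS_PER_SECOND)
print(f"about {years:.2e} years to exhaust a 128-bit key space")
```

Under that assumption the result is on the order of 10^19 years, in line with the "more than a billion billion years" quoted above. On average an attacker would succeed after searching half the key space, which does not change the order of magnitude much.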

A standard 128-bit key can’t be cracked by a brute-force algorithm running on a conventional binary system, but when we replace the two-state bit with a quantum bit that can exist in many states at once, the tables are turned.

The result is that a 128-bit key that is so formidable against the brute force of classical binary supercomputers will fall flat when quantum computing is used to carry out the brute-force algorithm.

No quantum machine capable of this exists today, but experts have estimated that a quantum supercomputer would be able to crack 128-bit encryption keys within 100 seconds. Compare that to the billion billion years it would take a binary computer to crack the same code!

Aftermath

If data encryption becomes ineffective, it will expose everything to criminal elements. To understand just a fraction of this devastation, imagine that every person on earth linked to the banking system loses access to their account. The mere idea of such a situation can send chills down your spine.

Apart from that, the neutralization of data encryption can lead to cyber warfare between nation-states. Here also, rogue elements will easily be able to capitalize on the situation.

A global outbreak of war in a world with eight existing nuclear powers could have a dreadful outcome. All things considered, the arrival of quantum computing could bring many irreversible repercussions.

Preparation to Protect Against the Nefarious Use of Quantum Computing

Google and IBM have successfully carried out quantum computing in a controlled environment. So it would be unwise to think of quantum computers as a distant reality. Businesses should therefore start preparing against this abuse now. There is no point in waiting for formal rules and protocols to be issued. Experts working in the area of digital security and cryptography recommend some measures to protect business data from exploitation in the quantum era.

Conclusion

The way technology has progressed in the last few decades indicates that quantum computing is the reality of the future. So, the arrival of quantum computers is not a question of ‘if’ but a question of ‘when’.

Quantum computing, for all its benefits to the life sciences, the financial sector, and AI, poses a great threat to the existing encryption system, which is central to the protection of any type of confidential data. The proper approach for any nation or business is to accept this unwanted aspect of quantum computing as a technological hazard and start preparing against it with the help of experts.

With that said, it will also be a blessing when used proactively for the benefit of humankind and we look forward to a better lifestyle for each of us when quantum computing becomes a reality.